Distance Time Velocity Time Graph

Distance Time Velocity Time Graph: Distance-time graphs and velocity-time graphs are two related ways of representing motion. A distance-time graph shows how far an object has traveled (or where it is) at each moment, while a velocity-time graph, sometimes called a speed-time graph, shows how the object’s velocity or speed changes over time. Here’s how to read and interpret each one:

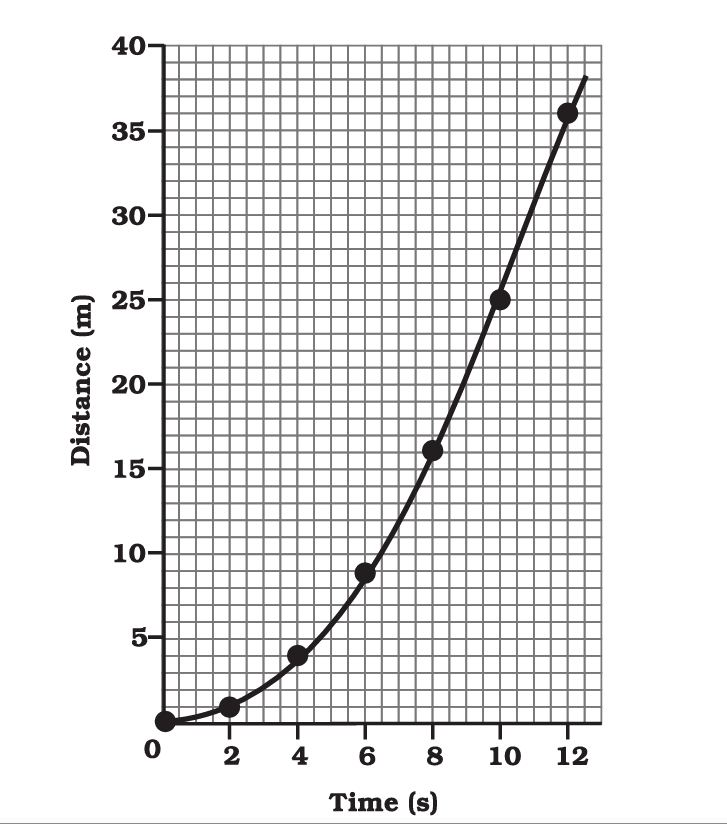

Distance-Time Graph:

- On the horizontal axis (x-axis), you’ll find time, typically measured in seconds (s).

- On the vertical axis (y-axis), you’ll find distance or displacement, typically measured in meters (m) or kilometers (km).

- A straight line on a distance-time graph represents constant velocity or speed. The slope of the line indicates the velocity.

- A horizontal line indicates that the object is at rest (not moving).

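- An upward-sloping line indicates that the object is moving. On a displacement-time (position-time) graph, it indicates positive velocity (moving away from the starting point).

- On a displacement-time graph, a downward-sloping line indicates negative velocity (moving back toward the starting point). A true distance-time graph never slopes downward, because the total distance traveled cannot decrease.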

- Curved lines represent changing velocities or acceleration.
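To make the slope idea concrete, here is a minimal Python sketch that computes the speed on each straight segment of a distance-time graph as change in distance divided by change in time. The function name `segment_speeds` and the sample readings are hypothetical, chosen only for illustration.

```python
def segment_speeds(times_s, distances_m):
    """Return the slope (speed in m/s) of each straight segment of a distance-time graph."""
    speeds = []
    for i in range(1, len(times_s)):
        delta_d = distances_m[i] - distances_m[i - 1]  # change in distance (m)
        delta_t = times_s[i] - times_s[i - 1]          # change in time (s)
        speeds.append(delta_d / delta_t)
    return speeds


# Hypothetical readings: the object moves, stops, then moves again faster.
times = [0, 2, 4, 6]
distances = [0, 10, 10, 40]
print(segment_speeds(times, distances))  # [5.0, 0.0, 15.0]
```

The zero slope in the middle segment corresponds to the horizontal part of the graph (at rest), and the larger final slope corresponds to the steeper line (faster motion).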

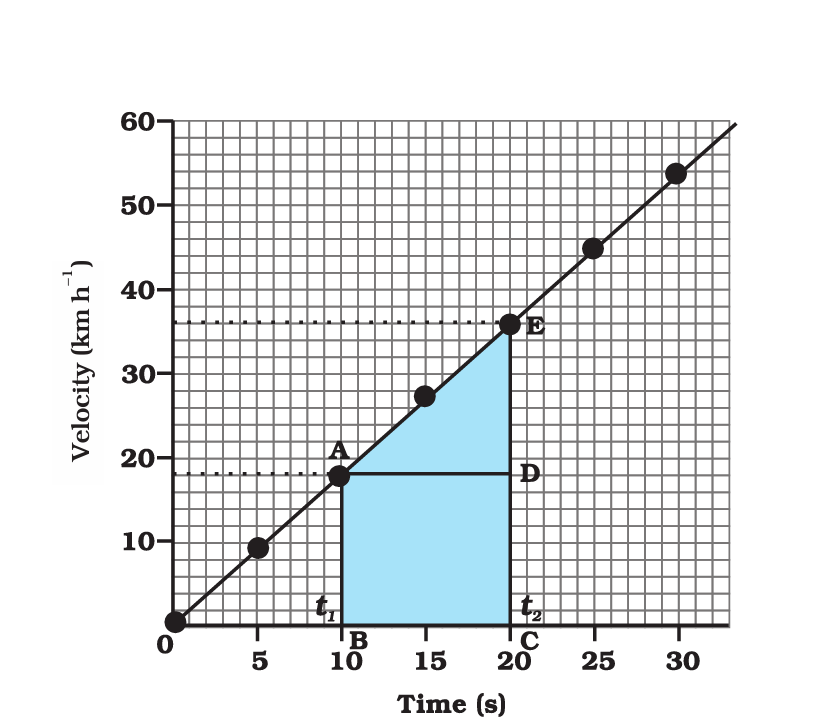

Velocity-Time Graph:

- On the horizontal axis (x-axis), you’ll find time, typically measured in seconds (s).

- On the vertical axis (y-axis), you’ll find velocity or speed, typically measured in meters per second (m/s) or kilometers per hour (km/h).

- A straight line on a velocity-time graph represents constant acceleration or deceleration.

- The slope of the line indicates acceleration (positive or negative) when the line is not horizontal.

- A horizontal line represents constant velocity (no acceleration).

- The area between the graph and the time axis gives the displacement: areas above the axis count as positive and areas below it as negative. Adding up all the areas as positive values instead gives the total distance traveled.

- Points where the velocity-time graph crosses the time axis represent moments when the object is momentarily at rest (velocity = 0), often because it is reversing direction.
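The slope and area rules can be checked numerically. The Python sketch below assumes the velocity-time graph is made of straight segments between hypothetical data points; `analyze_velocity_time` is an illustrative name, not a standard function. Each segment’s slope gives the acceleration, its signed trapezoid area adds to the displacement, and when a segment crosses the time axis it is split at the crossing so the part below the axis still counts as positive distance.

```python
def analyze_velocity_time(times_s, velocities_ms):
    """Return (accelerations, displacement, distance) for a piecewise-linear v-t graph."""
    accelerations = []
    displacement = 0.0
    distance = 0.0
    for i in range(1, len(times_s)):
        t0, t1 = times_s[i - 1], times_s[i]
        v0, v1 = velocities_ms[i - 1], velocities_ms[i]
        dt = t1 - t0
        accelerations.append((v1 - v0) / dt)   # slope of the segment = acceleration
        displacement += 0.5 * (v0 + v1) * dt   # signed trapezoid area
        if v0 * v1 < 0:
            # The line crosses the time axis (object momentarily at rest):
            # split it there so both triangles count as positive distance.
            t_cross = dt * v0 / (v0 - v1)      # time from t0 to the crossing
            distance += 0.5 * abs(v0) * t_cross + 0.5 * abs(v1) * (dt - t_cross)
        else:
            distance += 0.5 * (abs(v0) + abs(v1)) * dt
    return accelerations, displacement, distance


# Hypothetical data: speeds up, cruises, then slows down and reverses.
acc, disp, dist = analyze_velocity_time([0, 2, 4, 6], [0, 4, 4, -2])
print(acc)          # [2.0, 0.0, -3.0]
print(disp, dist)   # 14.0 and about 15.33 (46/3)
```

The last segment crosses the axis at about t = 5.33 s, where the object is momentarily at rest, so the total distance is larger than the displacement.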

Interpreting the Graphs:

- On a distance-time graph, the steeper the slope, the greater the speed or velocity.

- On a velocity-time graph, the slope indicates acceleration. Positive slopes indicate acceleration in the positive direction, while negative slopes indicate acceleration in the negative direction.

- A horizontal line on a velocity-time graph implies constant velocity (zero acceleration).

- The area under a velocity-time graph represents the displacement over that time interval; for motion in one direction only, this equals the distance traveled.

In summary, a distance-time graph shows how an object’s position changes with time, while a velocity-time graph reveals how an object’s velocity or speed changes with time.

Frequently Asked Questions (FAQs) on Distance Time Velocity Time Graphs:

What is a distance-time graph, and how is it used?

A distance-time graph illustrates how an object’s position or distance changes over time. It’s used to visualize an object’s motion, calculate its speed, and understand whether it is moving, at rest, or changing its speed.

How do you interpret a straight line on a distance-time graph?

A straight line on a distance-time graph represents constant speed. The slope of the line indicates the speed, with a steeper slope representing a greater speed.

What does a horizontal line on a distance-time graph signify?

A horizontal line indicates that the object is at rest or not changing its position with time.

What is a velocity-time graph, and what does it show?

A velocity-time graph depicts how an object’s velocity (speed and direction) changes over time. It provides insights into an object’s acceleration, constant velocity, and moments of rest.

How is acceleration represented on a velocity-time graph?

Acceleration is represented by the slope of a line on a velocity-time graph. A positive slope indicates acceleration in the positive direction, while a negative slope represents acceleration in the negative direction.

Difference Between Kinetics And Kinematics

Difference Between Kinetics And Kinematics: Kinetics and kinematics are both branches of mechanics, the part of physics that studies the motion of objects. Although the terms look similar, they describe different things.

Nature of Study:

Kinematics: Kinematics is the branch of physics that deals with the study of motion of objects without considering the forces causing that motion. It focuses on describing the position, velocity, and acceleration of objects as they move through space and time.

Kinetics: Kinetics, on the other hand, is the branch of physics that delves into the causes of motion. It is concerned with the analysis of the forces, torques, and energy transfers that lead to changes in an object’s motion.

Fundamental Concepts:

Kinematics: Kinematics primarily deals with concepts like displacement, velocity, acceleration, and time. It provides a framework for describing how objects move and change position relative to time.

Kinetics: Kinetics is centered on concepts like force, mass, inertia, work, energy, and momentum. It investigates how these factors interact to produce or change motion.

Representation:

Kinematics: Kinematics is often represented mathematically through equations and graphs that describe an object’s motion in terms of position, velocity, and acceleration as functions of time.

Kinetics: Kinetics involves the use of Newton’s laws of motion, equations of motion, and various mathematical principles to analyze and predict the forces acting on objects and their resulting motion.

Key Questions:

Kinematics: Kinematics answers questions like “What is the object’s position at a given time?” or “How fast is the object moving?” It focuses on the “what” and “how” of motion.

Kinetics: Kinetics addresses questions like “Why is the object accelerating?” or “What force is causing the object to move?” It investigates the underlying causes and the “why” of motion.

Example:

Kinematics: When describing the motion of a car on a highway, kinematics would provide information about the car’s speed, its change in position over time, and how its velocity is changing.

Kinetics: Kinetics, in the context of the same car, would analyze the forces acting on it, such as engine force, friction, and air resistance, to explain why the car is accelerating, decelerating, or maintaining a constant speed.
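The car example can be turned into a short calculation that shows where each branch does its work. In this Python sketch, the kinetics step uses Newton’s second law to find the acceleration from the forces, and the kinematics step uses the constant-acceleration equations v = u + at and s = ut + at²/2 to describe the resulting motion. The mass and force values, and the function names, are hypothetical and chosen only for illustration.

```python
def net_acceleration(mass_kg, engine_force_n, resistive_force_n):
    """Kinetics: Newton's second law, a = F_net / m, with friction and drag lumped together."""
    return (engine_force_n - resistive_force_n) / mass_kg


def kinematic_state(initial_velocity_ms, acceleration_ms2, time_s):
    """Kinematics: v = u + a t and s = u t + a t^2 / 2 for constant acceleration."""
    v = initial_velocity_ms + acceleration_ms2 * time_s
    s = initial_velocity_ms * time_s + 0.5 * acceleration_ms2 * time_s ** 2
    return v, s


# Hypothetical car: 1000 kg, 3000 N engine force, 1000 N of friction plus air resistance.
a = net_acceleration(1000.0, 3000.0, 1000.0)   # why it accelerates: 2.0 m/s^2
v, s = kinematic_state(0.0, a, 5.0)            # how it moves after 5 s from rest
print(a, v, s)  # 2.0 10.0 25.0
```

The kinematics function never mentions force; it only needs the acceleration, which is exactly the split described above.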

In summary, kinematics is concerned with describing the motion of objects and their geometric characteristics (position, velocity, acceleration) without considering the underlying forces.

Kinetics, on the other hand, focuses on understanding the forces and interactions that cause objects to move or change their state of motion. Both branches are essential in the study of mechanics and the analysis of physical systems.

Frequently Asked Questions (FAQs)

What is the primary focus of kinematics?

Kinematics primarily focuses on describing the motion of objects, including parameters such as position, velocity, acceleration, and time, without considering the forces causing that motion.

How does kinetics differ from kinematics in terms of focus?

Kinetics is concerned with understanding the causes of motion, particularly the forces, torques, and energy transfers that lead to changes in an object’s motion.

Can you provide an example illustrating the difference between kinetics and kinematics?

Certainly. Consider a car moving along a road. Kinematics would describe its speed, position changes, and acceleration patterns. Kinetics, on the other hand, would analyze the forces responsible for the car’s motion, such as engine force, friction, and air resistance.

What are some fundamental concepts in kinematics?

In kinematics, fundamental concepts include displacement, velocity, acceleration, and time. These concepts help describe how objects move without delving into the causes of motion.

What key concepts are involved in kinetics?

Kinetics involves concepts like force, mass, inertia, work, energy, and momentum. It investigates how these factors interact to produce or change motion and explores the underlying causes of motion.

Difference Between Force And Pressure

Difference Between Force And Pressure: Force and pressure are both fundamental concepts in physics, but they are not the same thing. To see the difference, it helps to compare their definitions and applications.

A force is a push or pull that can change an object’s motion, direction, or shape, while pressure measures how much force acts on each unit of area.

Definition:

Force: Force is a vector quantity that represents a push or pull exerted on an object due to its interaction with another object. It has both magnitude and direction and is measured in newtons (N).

Pressure: Pressure is a scalar quantity that measures the intensity of a force applied to a specific area. It is the force per unit area and is expressed in units such as pascals (Pa) or pounds per square inch (psi).

Nature:

Force: Force is a fundamental concept in physics and can manifest as a push or pull along a straight line or at an angle. It acts on a specific point or object and can result in motion or deformation.

Pressure: Pressure describes how a force is spread over an area rather than how it acts at a single point. It need not be uniform across a surface: the pressure under a thumbtack’s sharp point, for example, is far higher than under its broad head.

Vector vs. Scalar:

Force: Force is a vector quantity because it has both magnitude and direction. It is represented by an arrow indicating the direction of the push or pull.

Pressure: Pressure is a scalar quantity as it has magnitude only. It does not have a specific direction associated with it.

Units:

Force: Force is measured in newtons (N) in the International System of Units (SI) and can also be expressed in other units like pound-force (lbf) or dynes.

Pressure: Pressure is measured in pascals (Pa) in SI units. In other systems, it can be measured in pounds per square inch (psi) or atmospheres (atm).

Formula:

Force: By Newton’s second law, the net force on an object of constant mass is F = ma, where F represents force, m is mass, and a is acceleration. More generally, net force equals the rate of change of momentum, F = Δp/Δt.

Pressure: Pressure is calculated using the formula P = F/A, where P represents pressure, F is the force applied, and A is the area over which the force is distributed.
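The two formulas can be combined in a small worked example. This Python sketch computes a force from F = ma and then the pressure that force produces on two different areas, assuming the force is spread evenly over each area. The masses, accelerations, and areas are hypothetical; the point is that the same force gives a much higher pressure on a smaller area, which is why a thumbtack’s point pierces a board.

```python
def net_force(mass_kg, acceleration_ms2):
    """Newton's second law for constant mass: F = m a (newtons)."""
    return mass_kg * acceleration_ms2


def pressure(force_n, area_m2):
    """P = F / A (pascals), assuming the force is spread evenly over the area."""
    return force_n / area_m2


f = net_force(2.0, 5.0)        # 10 N
print(pressure(f, 0.5))        # 20.0 Pa on a 0.5 m^2 surface
print(pressure(f, 0.0001))     # about 1e5 Pa on 1 cm^2: same force, far higher pressure
```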

Effect on Objects:

Force: A force can result in the motion of an object or changes in its shape or velocity. It can cause acceleration or deformation.

Pressure: Pressure affects the deformation of an object or the behavior of fluids (liquids and gases) within containers. It does not necessarily cause motion but can lead to changes in the state or shape of matter.

Examples:

Force: Examples of forces include lifting a book, pushing a car, or pulling an object with a rope.

Pressure: Examples of pressure include the pressure exerted by a gas in a closed container, atmospheric pressure on Earth’s surface, and the pressure applied by a thumbtack on a bulletin board.

In summary, force is a vector quantity representing a push or pull with magnitude and direction, while pressure is a scalar quantity representing the distribution of force over a specific area.

Frequently Asked Questions (FAQs)

What is the fundamental difference between force and pressure?

The fundamental difference lies in their nature. Force is a vector quantity with both magnitude and direction, representing a push or pull on an object. Pressure, on the other hand, is a scalar quantity that measures the intensity of force distributed over an area.

How is force measured?

Force is typically measured in newtons (N) in the International System of Units (SI). It can also be expressed in other units like pound-force (lbf) or dynes.

What are some real-world examples of force?

Examples of forces include lifting objects, pushing or pulling a car, gravitational forces, and tension in ropes or cables.

How is pressure calculated?

Pressure is calculated using the formula P = F/A, where P represents pressure, F is the force applied, and A is the area over which the force is distributed.

Is pressure a vector or scalar quantity?

Pressure is a scalar quantity, as it has magnitude but does not have a specific direction associated with it.

Difference Between Diode And Rectifier

Difference Between Diodes and Rectifiers: Diodes and rectifiers are electronic components commonly used in electrical circuits, but they serve different purposes. Here are the key differences between a diode and a rectifier:

Function:

Diode: A diode is a two-terminal semiconductor device that primarily allows the flow of electric current in one direction while blocking it in the other direction.

It serves as a one-way valve for electrical current and is often used for tasks such as rectification, voltage regulation (Zener diodes), signal demodulation, and protecting circuits against reverse polarity or voltage spikes.

Rectifier: A rectifier is a circuit or a device (usually consisting of multiple diodes) that converts alternating current (AC) into direct current (DC).

Rectifiers work by passing only one polarity of the AC waveform, or by flipping the negative half-cycles to positive, so that the output current flows predominantly in one direction.

Number of Terminals:

Diode: A diode has two terminals: an anode (positive) and a cathode (negative). It allows current to flow from the anode to the cathode when forward-biased and blocks current when reverse-biased.

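Rectifier: A rectifier may use a single diode (a half-wave rectifier) or several diodes arranged in a specific configuration, such as a center-tapped full-wave rectifier or a bridge rectifier. Depending on the design, a rectifier can have more than two terminals; a packaged bridge rectifier, for example, has four: two AC inputs and two DC outputs.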

Typical Applications:

Diode: Diodes find applications in a wide range of electronic circuits, including voltage clamping, signal rectification, signal modulation, and protection against reverse voltage.

Rectifier: Rectifiers are specifically used to convert AC to DC. They are essential components in power supplies, battery chargers, and devices that require a constant source of DC voltage.

Direction of Current:

Diode: A diode allows current to flow in one direction (forward bias) while blocking it in the opposite direction (reverse bias).

Rectifier: A rectifier ensures that the output current flows predominantly in one direction, converting the AC input into a DC output.
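The difference between passing one half-cycle and flipping the other can be seen numerically. This Python sketch assumes ideal diodes with no forward voltage drop (real silicon diodes drop roughly 0.7 V each) and models a single-diode half-wave rectifier as `max(v, 0)` and a bridge (full-wave) rectifier as `abs(v)`. It then averages each output over one cycle of a 1 V peak sine wave; the helper names are hypothetical.

```python
import math


def half_wave(v):
    """Ideal single-diode rectifier: passes positive half-cycles, blocks negative ones."""
    return max(v, 0.0)


def full_wave(v):
    """Ideal bridge (full-wave) rectifier: flips negative half-cycles to positive."""
    return abs(v)


def average_output(rectifier, peak_v=1.0, samples=100_000):
    """Average (DC) value of a rectified sine wave over one period, using midpoint samples."""
    total = 0.0
    for k in range(samples):
        v_in = peak_v * math.sin(2 * math.pi * (k + 0.5) / samples)
        total += rectifier(v_in)
    return total / samples


print(average_output(half_wave), 1 / math.pi)   # both about 0.318
print(average_output(full_wave), 2 / math.pi)   # both about 0.637
```

The numerical averages match the textbook values of Vp/π for half-wave and 2Vp/π for full-wave rectification, showing why full-wave designs deliver more DC from the same AC input.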

Symbol:

Diode: The diode symbol is a triangle (arrow) pointing toward a vertical bar. The triangle side is the anode, the bar is the cathode, and the arrow shows the direction of conventional current when the diode is forward-biased.

Rectifier: Rectifier symbols vary depending on the specific configuration but often include multiple diode symbols connected in a bridge-like pattern.

In summary, a diode is a fundamental two-terminal semiconductor device that allows current flow in one direction, while a rectifier is a circuit or device that utilizes diodes to convert alternating current (AC) into direct current (DC).

Rectifiers are therefore an application of diodes rather than a different kind of component: they are circuits or modules built from diodes for the specific purpose of converting AC to DC.

Frequently Asked Questions (FAQs)

What is the primary function of a diode?

The primary function of a diode is to allow electric current to flow in one direction (forward bias) while blocking it in the opposite direction (reverse bias). It acts as an electronic check valve.

Can a diode be used to convert AC to DC like a rectifier?

In its simplest form, yes. A single diode in series with a load acts as a half-wave rectifier: it passes only one half of each AC cycle, producing pulsating DC rather than a smooth DC signal. Practical AC-to-DC conversion usually uses a rectifier circuit with several diodes (such as a bridge rectifier), often followed by a filter capacitor to smooth the output.

What are the terminals of a diode called?

A diode has two terminals: an anode (positive) and a cathode (negative).

What is the primary function of a rectifier?

The primary function of a rectifier is to convert alternating current (AC) into direct current (DC). It ensures that the output current flows predominantly in one direction.

Are rectifiers composed of multiple diodes?

Yes, often. Full-wave rectifiers are constructed from multiple diodes connected in specific configurations, such as center-tapped full-wave rectifiers or bridge rectifiers, which work together to achieve AC-to-DC conversion. A half-wave rectifier, by contrast, needs only one diode.

Difference Between AM And FM

Difference Between AM and FM: AM (Amplitude Modulation) and FM (Frequency Modulation) are two common methods used for transmitting information through radio waves.

They differ in how they encode and transmit signals. Here’s a comparison of the key differences between AM and FM:

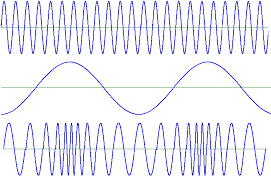

Modulation Technique:

AM: In AM, the amplitude (strength) of the carrier wave varies in proportion to the instantaneous amplitude of the modulating signal (e.g., audio signal). This means that the amplitude of the carrier wave is modulated to carry the information.

FM: In FM, the frequency of the carrier wave varies in proportion to the instantaneous amplitude of the modulating signal, while the carrier’s amplitude stays constant. In other words, the information is carried by changes in the carrier’s frequency.
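The two techniques can be compared with a single-tone message x(t) = cos(2π fm t). The Python sketch below uses the standard textbook forms: AM multiplies the carrier by (1 + m·x(t)), and FM shifts the carrier’s instantaneous frequency by Δf·x(t), which after integration gives the phase term β sin(2π fm t) with β = Δf/fm. The carrier, tone, modulation index, and deviation values are hypothetical and deliberately small so the samples are cheap to compute.

```python
import math


def am_signal(t, carrier_hz, tone_hz, mod_index=0.5):
    """AM: the carrier amplitude follows the message, (1 + m cos(2*pi*fm*t)) cos(2*pi*fc*t)."""
    envelope = 1.0 + mod_index * math.cos(2 * math.pi * tone_hz * t)
    return envelope * math.cos(2 * math.pi * carrier_hz * t)


def fm_signal(t, carrier_hz, tone_hz, peak_deviation_hz):
    """FM: instantaneous frequency fc + df*cos(2*pi*fm*t) tracks the message amplitude.

    Integrating that frequency gives the phase 2*pi*fc*t + beta*sin(2*pi*fm*t), beta = df / fm.
    """
    beta = peak_deviation_hz / tone_hz
    return math.cos(2 * math.pi * carrier_hz * t + beta * math.sin(2 * math.pi * tone_hz * t))


# Hypothetical values: 1 kHz carrier, 10 Hz tone, 1 s sampled at 50 kHz.
ts = [k / 50_000 for k in range(50_000)]
am = [am_signal(t, 1_000, 10) for t in ts]
fm = [fm_signal(t, 1_000, 10, 200) for t in ts]
print(max(abs(s) for s in am))   # 1.5: the peak amplitude swings with the message
print(max(abs(s) for s in fm))   # 1.0: constant amplitude, only the frequency changes
```

Because the FM waveform never changes amplitude, a receiver can clip off amplitude disturbances without losing information, which is the basis of FM’s noise resistance.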

Sensitivity to Interference:

AM: AM signals are more susceptible to amplitude variations due to interference, such as atmospheric disturbances and electrical noise. This can result in audible static and interference.

FM: FM receivers largely ignore amplitude variations (a limiter stage clips them off), because the information is carried in the carrier’s frequency. Since most noise and interference shows up as amplitude changes, FM signals are generally much less prone to static, providing clearer audio quality.

Bandwidth:

AM: AM signals have a narrower bandwidth compared to FM signals. This means that AM stations can be placed closer together on the radio dial but have a limited audio bandwidth.

FM: FM signals have a wider bandwidth, allowing for higher-fidelity audio transmission. However, this wider bandwidth requires FM stations to be spaced farther apart on the dial to prevent interference.
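The bandwidth difference can be estimated with two common rules of thumb. Double-sideband AM occupies twice the highest audio frequency, and FM bandwidth is usually approximated with Carson’s rule, 2(Δf + fm), where Δf is the peak frequency deviation and fm the highest audio frequency. This Python sketch applies them to broadcast FM’s typical 75 kHz deviation and 15 kHz audio, and to an AM signal carrying a hypothetical 5 kHz of audio; the function names are illustrative.

```python
def am_bandwidth(max_audio_hz):
    """Double-sideband AM: one sideband on each side of the carrier, 2 * fm."""
    return 2 * max_audio_hz


def fm_bandwidth_carson(peak_deviation_hz, max_audio_hz):
    """Carson's rule approximation for FM bandwidth: 2 * (deviation + fm)."""
    return 2 * (peak_deviation_hz + max_audio_hz)


print(am_bandwidth(5_000))                  # 10000
print(fm_bandwidth_carson(75_000, 15_000))  # 180000
```

An FM broadcast channel therefore needs well over ten times the spectrum of an AM channel, which is why FM stations are spaced farther apart.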

Signal Quality:

AM: AM signals are known for their simplicity and are suitable for transmitting voice and news. However, they have lower audio quality compared to FM and are more susceptible to noise.

FM: FM signals offer superior audio quality, making them ideal for music transmission. They are less prone to noise and interference, resulting in clear and high-fidelity sound.

Range and Coverage:

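AM: AM broadcast stations operate in the medium-wave (and shortwave) bands. Signals at these frequencies follow the Earth’s surface as ground waves and, especially at night, are reflected by the ionosphere, so they can travel long distances. This makes AM suitable for long-range communication; the advantage comes from the frequency band used rather than from amplitude modulation itself.

FM: FM broadcasting uses the VHF band (about 88 to 108 MHz in most countries), whose signals pass through the ionosphere instead of being reflected. FM reception is therefore limited roughly to line of sight, so FM is typically used for local and regional broadcasting.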

Applications:

AM: AM is commonly used for broadcasting news, talk radio, and other voice transmissions.

FM: FM is preferred for music broadcasting on FM radio stations and is also used in applications like two-way radio communication.

In summary, AM and FM are two distinct modulation techniques used in radio communication, each with its own set of advantages and disadvantages. AM is known for its longer range and simplicity, while FM offers better audio quality and resistance to interference. The choice between AM and FM depends on the specific requirements of the communication system and the type of content being transmitted.

Frequently Asked Questions (FAQs) on Difference Between AM And FM

What is AM and FM?

AM stands for Amplitude Modulation, and FM stands for Frequency Modulation. They are two different methods of modulating radio waves to transmit information.

How do AM and FM differ in modulation technique?

In AM, the amplitude of the carrier wave varies with the information signal, while in FM, the frequency of the carrier wave changes with the information signal.

Which has better audio quality, AM or FM?

FM has better audio quality compared to AM. FM signals are less prone to noise and interference, resulting in clearer and higher-fidelity sound.

Are AM and FM signals equally susceptible to interference?

No. AM signals are more susceptible to interference because most noise appears as amplitude variations, which directly corrupt an AM signal. FM receivers discard amplitude variations and recover the information from the carrier’s frequency, so FM signals are much less affected.

Why are AM signals more suitable for long-range communication?

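AM broadcast signals use medium-wave and shortwave frequencies, which travel as ground waves and bounce off the ionosphere, especially at night. This property, which comes from the frequency band rather than the modulation method itself, makes AM broadcasting suitable for long-range communication.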

Dielectric Material And Dipole Moment

Dielectric Material And Dipole Moment: In this piece, we explore insulators and dielectric materials, and the distinguishing features of polar and non-polar molecules.

Insulators and Dielectric Materials:

Insulators:

Insulators, also known as non-conductors, are materials that impede the flow of electric current. Unlike conductors, which allow the movement of electric charges, insulators have electrons tightly bound to their atoms or molecules.

This strong binding prevents the easy flow of electrons, resulting in a lack of conductivity. Insulators are commonly used to isolate or separate conductive materials, thereby preventing the leakage of electrical current and ensuring safety in various applications.

Examples of insulators include rubber, plastic, glass, ceramic, and wood. These materials are often utilized in electrical wiring, electronics, and construction to prevent unintentional electrical contact.

Dielectric Materials:

Dielectric materials share similarities with insulators, but the term focuses on how they behave in electric fields. When placed in an electric field, a dielectric becomes polarized: its bound charges shift slightly, with electron clouds displaced relative to the nuclei and any permanent molecular dipoles tending to line up with the field.

This polarization creates temporary dipoles within the material. While dielectric materials hinder the flow of electric current, their ability to polarize in the presence of an electric field is essential in various applications, particularly in capacitors.

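Dielectric materials are used to fill the gap between the plates of a capacitor. The capacitor stores electric energy in the electric field between its oppositely charged plates, and the polarized dielectric partly cancels that field.

This stored energy can then be released when needed. Because the dielectric reduces the field (and therefore the voltage) for a given charge, it increases the capacitance by a factor equal to its dielectric constant, allowing the capacitor to store more charge for a given voltage.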
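For an ideal parallel-plate capacitor (edge effects ignored), the capacitance is C = κε₀A/d, where κ is the dielectric constant, ε₀ the vacuum permittivity, A the plate area, and d the gap. This Python sketch compares an air gap (κ ≈ 1) with a hypothetical dielectric of κ = 4, then computes the stored charge Q = CV and energy U = CV²/2. The plate size, gap, and voltage are hypothetical values chosen for illustration.

```python
EPSILON_0 = 8.8541878128e-12  # vacuum permittivity, F/m


def parallel_plate_capacitance(area_m2, gap_m, dielectric_constant=1.0):
    """Ideal parallel-plate capacitor: C = kappa * eps0 * A / d (fringing ignored)."""
    return dielectric_constant * EPSILON_0 * area_m2 / gap_m


# Hypothetical capacitor: 10 cm x 10 cm plates, 1 mm gap, charged to 12 V.
area, gap, volts = 0.01, 1e-3, 12.0
c_air = parallel_plate_capacitance(area, gap)         # about 88.5 pF
c_diel = parallel_plate_capacitance(area, gap, 4.0)   # hypothetical kappa = 4
for label, c in (("air", c_air), ("dielectric", c_diel)):
    charge = c * volts               # Q = C V
    energy = 0.5 * c * volts ** 2    # U = C V^2 / 2
    print(f"{label}: C = {c * 1e12:.1f} pF, Q = {charge * 1e9:.2f} nC, U = {energy * 1e9:.2f} nJ")
```

At the same 12 V, the dielectric-filled capacitor holds four times the charge and four times the energy.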

In summary, insulators prevent the flow of electric current by binding electrons tightly to atoms or molecules, while dielectric materials exhibit polarization in response to electric fields, making them valuable for energy storage and manipulation in devices like capacitors. Both insulators and dielectric materials are vital components in modern technology and electrical engineering.

Polar and Non-Polar Molecules:

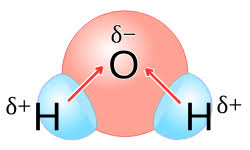

Polar Molecules:

Polar molecules are those in which the distribution of electrical charge is uneven, resulting in a separation of positive and negative charges within the molecule. This charge separation gives rise to a molecular dipole moment, creating a positive end (where the positive charge is concentrated) and a negative end (where the negative charge is concentrated) within the molecule.
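The size of a dipole moment is commonly computed as p = q × d, the magnitude of the separated charge times the distance between the charges, and chemists usually quote it in debye (1 D ≈ 3.336 × 10⁻³⁰ C·m). This Python sketch evaluates it for an idealized pair of full charges ±e one ångström (10⁻¹⁰ m) apart. Real molecules carry only partial charges, so their dipole moments are smaller; water’s is about 1.85 D.

```python
ELEMENTARY_CHARGE = 1.602176634e-19  # C (exact SI value)
DEBYE = 3.33564e-30                  # C·m per debye (approximate)


def dipole_moment(charge_c, separation_m):
    """Magnitude of an electric dipole moment: p = q * d, in C·m."""
    return charge_c * separation_m


# Idealized dipole: charges +e and -e separated by 1 angstrom.
p = dipole_moment(ELEMENTARY_CHARGE, 1.0e-10)
print(p, p / DEBYE)   # about 1.6e-29 C·m, i.e. about 4.8 D
```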

Polarity in molecules is primarily influenced by the electronegativity difference between atoms and the molecular geometry. When atoms with differing electronegativities are bonded, the more electronegative atom tends to attract electrons more strongly, leading to an uneven charge distribution. Water (H2O) is a classic example of a polar molecule, with its bent shape and unequal sharing of electrons.

Polar molecules interact strongly with other polar molecules due to the attraction between their opposite charges. This phenomenon is essential in various biological and chemical processes, such as dissolving polar substances in water.

Non-Polar Molecules:

Non-polar molecules are characterized by an even distribution of electrical charge, resulting in no significant dipole moment. This usually occurs when atoms within the molecule have similar or very close electronegativities, leading to a balanced sharing of electrons.

Examples of non-polar molecules include diatomic gases like oxygen (O2) and nitrogen (N2), as well as hydrocarbons like methane (CH4) and ethane (C2H6). These molecules are made of identical atoms (zero electronegativity difference) or of atoms with only a small difference, such as carbon and hydrogen, and they often have symmetrical geometry in which any small bond dipoles cancel out.

Non-polar molecules tend to interact weakly with other non-polar molecules due to the absence of significant charge differences. This behavior is evident in processes like the dispersion forces between non-polar molecules, which contribute to the cohesion of substances like oils and gases.

In summary, polar molecules have an uneven distribution of charges, leading to a dipole moment and strong interactions with other polar molecules, while non-polar molecules exhibit an even charge distribution, resulting in weak interactions with other non-polar molecules. Understanding the polarity of molecules is crucial in explaining a wide range of chemical and physical properties.

Frequently Asked Questions (FAQs)

What is a dielectric material?

A dielectric material is an insulating substance that does not conduct electric current easily. When exposed to an electric field it becomes polarized: the charges within the material shift slightly, but they do not flow through it as a current.

How do dielectric materials behave in an electric field?

Dielectric materials become polarized when subjected to an electric field. The electrons within the material experience slight displacements, leading to induced dipoles. This polarization effect enhances the ability of dielectric materials to store electric energy in devices like capacitors.

What is the role of dielectric materials in capacitors?

Dielectric materials are used in capacitors to separate the electrically conductive plates. The polarization of the dielectric material increases the capacitance of the capacitor, allowing it to store more electric charge for a given voltage.

How is a dipole moment defined?

A dipole moment is a measure of the separation of positive and negative charges within a molecule or system. It indicates the strength and direction of the electric dipole created due to the charge separation.

What causes the creation of a dipole moment in a molecule?

A dipole moment arises when there is an uneven distribution of electrical charge within a molecule. This can result from differences in electronegativity between atoms within the molecule, leading to partial positive and negative charges.

Difference Between Centre Of Gravity And Centroid

Difference Between Centre Of Gravity And Centroid: The terms “center of gravity” and “centroid” are often used in various fields, such as physics, engineering, and mathematics, to describe different concepts related to the distribution of mass or geometric properties.

Although they might seem similar, they have distinct meanings and applications. Here’s a breakdown of the differences between the two:

Definition and Concept:

Centre of Gravity: The center of gravity of an object is the point at which the entire weight of the object can be considered to act. In other words, it is the point around which the object’s weight is evenly balanced in all directions.

It is the point where the gravitational force acts on the object, and if supported at this point, the object will be in equilibrium.

Centroid: The centroid of a geometric shape (such as a two-dimensional figure or a three-dimensional object) is the point at which the shape could be perfectly balanced if it were made of a uniform material.

It’s often considered as the geometric center or average position of all the points that make up the shape, without considering their masses or weights.

Application:

Centre of Gravity: The concept of center of gravity is primarily used in the study of mechanics and engineering to analyze the stability and equilibrium of objects.

For example, in architecture and design, understanding the center of gravity is crucial to ensure the stability of structures and prevent them from toppling over.

Centroid: The concept of centroid is used in mathematics, geometry, and various engineering fields to determine properties such as the geometric center, moment of inertia, and other geometric characteristics of shapes. It’s essential for calculating moments, areas, and volumes in design and analysis.

Calculation:

Centre of Gravity: Calculating the center of gravity involves considering the distribution of mass within an object and determining the point where the weight is balanced.

This calculation can be complex for irregularly shaped objects or those with varying densities.

Centroid: The centroid of a shape is often calculated using specific formulas that take into account the coordinates of the vertices or points that define the shape.

These formulas vary depending on the type of shape being analyzed (e.g., triangles, rectangles, circles).
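Both calculations can be sketched in a few lines. The Python example below finds the centroid of a polygon from its vertices with the shoelace-based formula, and the center of gravity as a mass-weighted average of part positions, assuming a uniform gravitational field (so the center of gravity equals the center of mass). The plate is hypothetical: a 4 × 2 rectangle whose left half has mass 4 and right half mass 12, so its center of gravity shifts away from the centroid toward the heavier side. The function names are illustrative.

```python
def polygon_centroid(vertices):
    """Centroid of a simple polygon (vertices in order) using the shoelace formula."""
    n = len(vertices)
    area2 = 0.0  # twice the signed area
    cx = cy = 0.0
    for i in range(n):
        x0, y0 = vertices[i]
        x1, y1 = vertices[(i + 1) % n]
        cross = x0 * y1 - x1 * y0
        area2 += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    # Cx = sum / (6A) = sum / (3 * 2A), and likewise for Cy.
    return cx / (3 * area2), cy / (3 * area2)


def center_of_gravity(parts):
    """Mass-weighted average position of (mass, x, y) parts, assuming uniform gravity."""
    total = sum(m for m, _, _ in parts)
    x = sum(m * px for m, px, _ in parts) / total
    y = sum(m * py for m, _, py in parts) / total
    return x, y


plate = [(0, 0), (4, 0), (4, 2), (0, 2)]
print(polygon_centroid(plate))                          # (2.0, 1.0): geometric center
print(center_of_gravity([(4.0, 1, 1), (12.0, 3, 1)]))   # (2.5, 1.0): pulled toward the heavy half
```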

Symmetry:

Centre of Gravity: The center of gravity may not coincide with the geometric center of an object, especially if the mass distribution is not uniform or, for very large objects, if the gravitational field varies across the object.

Centroid: For a shape with symmetry, the centroid lies on every axis of symmetry, so for regular shapes such as squares, circles, and equilateral triangles it is at the geometric center. For irregular shapes, the centroid is found from the specific geometry of the shape.

In summary, the center of gravity relates to the distribution of weight and is essential for understanding the stability of objects, while the centroid focuses on the geometric center of shapes and aids in calculating various geometric properties.

Frequently Asked Questions (FAQs)

What is the centre of gravity?

The centre of gravity is the point within an object where the entire weight of the object can be considered to act. It’s the point where the gravitational force effectively acts on the object, determining its equilibrium and stability.

What is the centroid?

The centroid is the geometric center or average position of all the points that make up a shape, without considering their masses or weights. It’s a point used to define the balance and geometric properties of a shape.

How are these concepts used in physics and engineering?

The center of gravity is used in physics and engineering to analyze the stability and balance of objects. It helps determine how external forces will affect an object's equilibrium. The centroid, on the other hand, is used to calculate geometric properties of shapes, such as the second moment of area (area moment of inertia) and the distribution of areas or volumes; for a body of uniform density, it also gives the center of mass.

Are the center of gravity and centroid always the same point?

Not necessarily. For an object of uniform density in a uniform gravitational field, the center of gravity coincides with the centroid. When the mass distribution is non-uniform, the center of gravity shifts toward the heavier regions, while the centroid, being a purely geometric property, is unaffected by how the mass is distributed.

How are the center of gravity and centroid calculated?

Calculating the center of gravity involves considering the distribution of mass within an object and finding the point where the weight is balanced. The centroid of a shape is calculated using specific geometric formulas based on the coordinates of the shape’s vertices or points.

Kinetic Gas Equation Derivation

Kinetic Gas Equation Derivation: The kinetic gas equation, PV = (1/3) N m v_rms^2, describes the behavior of an ideal gas in terms of the motion of its molecules, and it leads directly to the ideal gas law, PV = nRT.

It relates the pressure and volume of a gas to the number of gas molecules, their mass, and their root-mean-square speed; linking that speed to temperature then brings in the ideal gas constant. Here, we'll outline the derivation step by step.

Kinetic Gas Equation Derivation

Step 1: Understanding Assumptions

The derivation rests on several assumptions about an ideal gas:

- Gas molecules are considered to be point masses with no volume.

- Gas molecules undergo elastic collisions with each other and the container walls.

- There are no intermolecular forces between gas molecules.

- The volume occupied by the gas molecules themselves is negligible compared to the container’s volume.

Step 2: Defining Pressure and Force

Pressure (P) is defined as force (F) per unit area (A):

P = F/A

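Step 3: Force Due to Collisions

Consider a gas molecule of mass $m$ inside a cubic container of side $L$, striking a wall perpendicular to the x-axis. The collision is elastic, so the x-component of its velocity reverses from $v_x$ to $-v_x$ and its momentum changes by $\Delta p = 2mv_x$. The molecule travels a distance $2L$ before it hits the same wall again, so the time between successive collisions with that wall is $\Delta t = 2L/v_x$. By Newton's second law, the average force the molecule exerts on the wall is:

$$F = \frac{\Delta p}{\Delta t} = \frac{2mv_x}{2L/v_x} = \frac{mv_x^2}{L}$$

Where:

- ∆p is the change in momentum of the molecule,

- v_x is the x-component of the velocity of the molecule,

- ∆t is the time between successive collisions with the same wall, and

- L is the length of the container along the x-axis.

Step 4: Relating Force to Pressure

Summing this force over all $N$ molecules in the container and substituting it into the pressure definition, with wall area $A = L^2$ and container volume $V = L^3$, gives:

$$P = \frac{F_{\text{total}}}{A} = \frac{Nm\overline{v_x^2}}{L^3} = \frac{Nm\overline{v_x^2}}{V}$$

where $\overline{v_x^2}$ is the mean of $v_x^2$ over all molecules. Because the molecules move randomly, no direction is preferred, so $\overline{v_x^2} = \overline{v_y^2} = \overline{v_z^2} = \tfrac{1}{3}\overline{v^2}$. Therefore:

$$PV = \frac{1}{3}Nm\overline{v^2}$$

This is the kinetic gas equation. Since the average kinetic energy of a molecule is $\bar{E}_k = \tfrac{1}{2}m\overline{v^2}$, it can also be written as $PV = \tfrac{2}{3}N\bar{E}_k$.

Step 5: Relating Velocity and Temperature

The average kinetic energy of a gas molecule is proportional to its absolute temperature $T$ (in kelvin):

$$\frac{1}{2}mv_{\text{rms}}^2 = \frac{3}{2}kT$$

Where:

- k is the Boltzmann constant,

- m is the mass of a gas molecule,

- v_rms is the root-mean-square speed of the molecules, so that $v_{\text{rms}}^2 = \overline{v^2}$.

Step 6: Substituting Velocity into Pressure Expression

From Step 5, $m\overline{v^2} = mv_{\text{rms}}^2 = 3kT$. Substituting this into the kinetic gas equation gives:

$$PV = \frac{1}{3}N(3kT) = NkT$$

Step 7: Molecule Count

The number of molecules per unit volume, $N/V$, is the number density, where $N$ is the total number of molecules in the volume $V$. If the amount of gas is $n$ moles, then $N = nN_A$, where $N_A \approx 6.022 \times 10^{23}\ \text{mol}^{-1}$ is the Avogadro constant.

Step 8: Combining Equations

Dividing by $V$ writes the pressure in terms of the number density, and substituting $N = nN_A$ gives:

$$P = \frac{N}{V}kT = \frac{nN_A kT}{V}$$

Step 9: Introducing Ideal Gas Constant

The ideal gas constant $R$ is defined as:

$$R = N_A k \approx 8.314\ \text{J mol}^{-1}\,\text{K}^{-1}$$

Step 10: Arriving at the Ideal Gas Law

Substitute the ideal gas constant into the equation:

$$P = \frac{nRT}{V}$$

Multiplying both sides by $V$ gives the ideal gas law, which follows from the kinetic gas equation:

$$PV = nRT$$

Where:

- P is pressure,

- V is volume,

- n is the number of moles of gas,

- R is the ideal gas constant,

- T is absolute temperature (in kelvin).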

The kinetic gas equation describes the behavior of ideal gases under various conditions, providing insights into their macroscopic properties based on the motion of their constituent particles.
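The step from the kinetic gas equation, PV = (1/3) N m v_rms^2, to the ideal gas law, PV = nRT, can be checked numerically. The sketch below is an illustration under stated assumptions: it draws random molecular velocities whose components are Gaussian with variance kT/m (the Maxwell-Boltzmann distribution), uses them to estimate the mean square speed, evaluates the kinetic gas equation, and compares the result with nRT. The gas sample (1 mol of molecules with mass 4.65 × 10^-26 kg, roughly that of N2, at 300 K in 0.0224 m^3) and the sample size are arbitrary choices.

```python
import math
import random

K_B = 1.380649e-23      # Boltzmann constant, J/K (exact SI value)
N_A = 6.02214076e23     # Avogadro constant, 1/mol (exact SI value)
R = N_A * K_B           # ideal gas constant, about 8.314 J/(mol K)


def mean_square_speed(mass: float, temperature: float, samples: int, seed: int = 0) -> float:
    """Estimate the mean of v^2 by sampling Maxwell-Boltzmann velocities.

    Each velocity component is Gaussian with mean 0 and variance kT/m.
    """
    rng = random.Random(seed)
    sigma = math.sqrt(K_B * temperature / mass)
    total = 0.0
    for _ in range(samples):
        vx, vy, vz = (rng.gauss(0.0, sigma) for _ in range(3))
        total += vx * vx + vy * vy + vz * vz
    return total / samples


# Illustrative values: 1 mol of molecules of mass ~4.65e-26 kg at 300 K in 0.0224 m^3.
n_moles, T, V, m = 1.0, 300.0, 0.0224, 4.65e-26
N = n_moles * N_A                   # total number of molecules

v2 = mean_square_speed(m, T, samples=100_000)
p_kinetic = N * m * v2 / (3 * V)    # kinetic gas equation: PV = (1/3) N m <v^2>
p_ideal = n_moles * R * T / V       # ideal gas law: PV = nRT

print(f"kinetic: {p_kinetic:.0f} Pa, ideal: {p_ideal:.0f} Pa")
print(f"v_rms = {math.sqrt(v2):.0f} m/s")
```

The two pressures agree closely; the small difference is sampling noise in the estimate of the mean square speed, and it shrinks as the sample size grows.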

Frequently Asked Questions (FAQs)

What is the kinetic gas equation, and how is it related to the ideal gas law?

The kinetic gas equation, PV = (1/3) N m v_rms^2, relates the pressure (P) and volume (V) of a gas to the number of molecules (N), their mass (m), and their root-mean-square speed (v_rms). Combined with the relation (1/2) m v_rms^2 = (3/2) kT, it leads to the ideal gas law, PV = nRT, a fundamental equation in thermodynamics that relates pressure, volume, temperature (T), and amount of gas (n, in moles), where R is the ideal gas constant.

Why is the ideal gas law also called the kinetic gas equation?

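The two names are often used interchangeably because the ideal gas law can be derived from the kinetic theory of gases, which considers the motion of gas molecules and their collisions. Strictly, the kinetic gas equation is the intermediate result PV = (1/3) N m v_rms^2, from which the ideal gas law follows once molecular kinetic energy is related to temperature.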

What are the assumptions made in the derivation of the kinetic gas equation?

The derivation of the kinetic gas equation is based on the following assumptions:

- Gas molecules are considered to be point masses with no volume.

- Gas molecules undergo perfectly elastic collisions with each other and the container walls.

- There are no intermolecular forces between gas molecules.

- The volume occupied by the gas molecules is negligible compared to the container’s volume.

How does the kinetic gas equation relate pressure, volume, temperature, and the number of gas molecules?

The ideal gas law, PV = nRT, which follows from the kinetic gas equation, relates the pressure, volume, temperature, and number of moles of gas in a system. For a fixed amount of gas, pressure is directly proportional to absolute temperature at constant volume, and inversely proportional to volume at constant temperature (Boyle's law).
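Evaluating P = nRT/V directly shows how pressure responds to each variable: at constant volume it scales with absolute temperature, and at constant temperature it scales inversely with volume. This minimal sketch uses a hypothetical helper `ideal_gas_pressure` and arbitrary example values.

```python
R = 8.314  # ideal gas constant, J/(mol K), rounded


def ideal_gas_pressure(n: float, temperature: float, volume: float) -> float:
    """Pressure in Pa from PV = nRT, with n in mol, T in K, and V in m^3."""
    if volume <= 0 or temperature <= 0:
        raise ValueError("volume and absolute temperature must be positive")
    return n * R * temperature / volume


p0 = ideal_gas_pressure(1.0, 300.0, 0.0224)
p_hot = ideal_gas_pressure(1.0, 600.0, 0.0224)   # double T at constant V
p_big = ideal_gas_pressure(1.0, 300.0, 0.0448)   # double V at constant T

print(round(p_hot / p0, 6), round(p_big / p0, 6))  # 2.0 0.5
```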

What role does the ideal gas constant (R) play in the equation?

The ideal gas constant, R ≈ 8.314 J/(mol·K), is the proportionality constant in PV = nRT. Because R = N_A k, the Boltzmann constant multiplied by the Avogadro constant, it bridges the microscopic behavior of gas molecules (described per molecule using k) and the macroscopic properties described per mole by pressure, volume, temperature, and amount of gas.

Electromagnetic Waves Transverse Nature

Electromagnetic Waves Transverse Nature: Electromagnetic waves are a fundamental aspect of the electromagnetic spectrum, encompassing phenomena such as radio waves, microwaves, visible light, and more.

One of the distinguishing characteristics of electromagnetic waves is their transverse nature, which sets them apart from longitudinal waves such as sound in air.

Transverse Waves: A Quick Overview

Transverse waves are waves in which the oscillations occur perpendicular to the direction in which the wave travels. This means that while the wave itself travels in one direction, the individual particles or fields oscillate at right angles to that direction.

Understanding Electromagnetic Waves:

- Electromagnetic Field Oscillations: Electromagnetic waves are composed of electric and magnetic fields that oscillate as the wave propagates. These fields are perpendicular to each other and to the direction of wave motion.

- Perpendicular Oscillations: In an electromagnetic wave, the electric field oscillates in one plane, while the magnetic field oscillates in a plane perpendicular to the electric field. Both fields are orthogonal to the direction of wave propagation.

- Light as an Example: Visible light, which is a form of electromagnetic wave, demonstrates this transverse nature. Because its electric and magnetic fields oscillate across the direction of travel, light can be polarized, which a longitudinal wave cannot be.
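The perpendicular geometry described above can be checked with a simple model. This sketch assumes a linearly polarized plane wave in vacuum travelling along x, with E along y and B along z (the choice of axes and the 500 nm wavelength are arbitrary). At several instants it confirms that E, B, and the propagation direction are mutually perpendicular, and that E × B points along the direction of travel.

```python
import math
from typing import Tuple

C = 299_792_458.0  # speed of light in vacuum, m/s
Vector = Tuple[float, float, float]


def dot(a: Vector, b: Vector) -> float:
    """Scalar product; zero means the vectors are perpendicular."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vector, b: Vector) -> Vector:
    """Vector product a x b."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def plane_wave_fields(x: float, t: float, e0: float, wavelength: float) -> Tuple[Vector, Vector]:
    """E and B of a linearly polarized plane wave in vacuum moving along +x.

    E oscillates along y and B along z, in phase, with |B| = |E| / c.
    """
    k = 2 * math.pi / wavelength   # wavenumber, rad/m
    omega = k * C                  # angular frequency, rad/s
    phase = math.cos(k * x - omega * t)
    return (0.0, e0 * phase, 0.0), (0.0, 0.0, e0 * phase / C)


direction: Vector = (1.0, 0.0, 0.0)  # direction of propagation
for t in (0.0, 0.4e-15, 0.8e-15):
    E, B = plane_wave_fields(x=0.0, t=t, e0=1.0, wavelength=500e-9)
    # Each dot product is zero, and E x B has only a non-negative x-component.
    print(dot(E, B), dot(E, direction), dot(B, direction), cross(E, B))
```

At the last instant both fields have reversed sign, yet E × B still points along +x, because the fields flip together while staying perpendicular to the direction of travel.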

Key Features of Transverse Nature:

- Polarization: The transverse nature of electromagnetic waves allows for polarization. Polarization refers to the oscillations being confined to a specific direction perpendicular to the wave's motion; by convention, the polarization direction is taken as the direction of the electric field.

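- Propagation in Empty Space: Electromagnetic waves can propagate through a vacuum, as they do not require a medium for their transmission. This is because they consist of changing electric and magnetic fields that sustain each other, not because they are transverse: transverse mechanical waves, such as waves on a string, still need a medium.

- Interference and Diffraction: Electromagnetic waves exhibit interference and diffraction patterns, which are observed when waves overlap or encounter obstacles. These are general wave phenomena, shared with longitudinal waves such as sound, so they are not a consequence of the transverse nature itself.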

Electromagnetic Waves and Transverse Motion:

- Electric and Magnetic Fields: Electromagnetic waves consist of varying electric and magnetic fields. As the wave travels, these fields oscillate in directions perpendicular to each other and to the direction of the wave.

- Perpendicular Oscillations: The electric field oscillates in a plane perpendicular to the magnetic field, and both are at right angles to the direction of wave propagation. This unique oscillation pattern gives electromagnetic waves their transverse nature.

- Visible Light Example: Consider visible light, a form of electromagnetic wave. The frequency of its oscillating electric and magnetic fields determines its color, and the transverse orientation of those oscillations is what makes polarization possible.

Significance and Characteristics:

- Polarization: The transverse nature allows electromagnetic waves to be polarized. Polarization refers to the alignment of the oscillations in a specific direction. Polarized sunglasses, for instance, filter out certain orientations of light waves to reduce glare.

- Propagation in a Vacuum: Electromagnetic waves can travel through a vacuum, devoid of any material medium. This is because the wave is carried by changing electric and magnetic fields rather than by oscillating particles, so no medium is needed.

- Interference and Diffraction: Like all waves, electromagnetic waves exhibit interference and diffraction patterns when they overlap or encounter obstacles. These patterns result from the superposition of waves with different phases and amplitudes.
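Superposition of waves can be illustrated numerically. This sketch assumes two sinusoidal waves of equal frequency (a simplification for the example), adds them over one period, and compares the sampled peak of the sum with the standard closed-form amplitude. The function names are illustrative.

```python
import math


def resultant_amplitude(a1: float, a2: float, phase_diff: float, samples: int = 10_000) -> float:
    """Peak of a1*sin(wt) + a2*sin(wt + phase_diff), sampled over one period."""
    peak = 0.0
    for i in range(samples):
        wt = 2 * math.pi * i / samples
        peak = max(peak, abs(a1 * math.sin(wt) + a2 * math.sin(wt + phase_diff)))
    return peak


def analytic_amplitude(a1: float, a2: float, phase_diff: float) -> float:
    """Closed form: A = sqrt(a1^2 + a2^2 + 2*a1*a2*cos(phase_diff))."""
    # max() guards against a tiny negative value from floating-point rounding
    return math.sqrt(max(0.0, a1**2 + a2**2 + 2 * a1 * a2 * math.cos(phase_diff)))


for phi in (0.0, math.pi / 2, math.pi):
    print(f"phase {phi:.2f} rad: sampled {resultant_amplitude(1.0, 1.0, phi):.3f}, "
          f"formula {analytic_amplitude(1.0, 1.0, phi):.3f}")
```

In phase, the amplitudes add (constructive interference); half a cycle out of phase, equal waves cancel (destructive interference); intermediate phase differences give intermediate results.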

Applications:

The transverse nature of electromagnetic waves is at the core of various technological applications, including wireless communication, radio broadcasting, television transmission, and fiber-optic communication. Understanding how these waves oscillate perpendicular to their motion helps engineers and scientists harness their properties for a wide range of purposes.

In summary, the transverse nature of electromagnetic waves is a defining characteristic that governs their behavior, propagation, and applications. This unique feature plays a pivotal role in our ability to communicate, observe the universe, and develop technologies that shape modern society.

Frequently Asked Questions (FAQs)

What does “transverse nature” mean in the context of electromagnetic waves?

In the context of electromagnetic waves, “transverse nature” refers to the perpendicular oscillations of electric and magnetic fields. These oscillations occur at right angles to the direction in which the wave is traveling.

How do the electric and magnetic fields oscillate in electromagnetic waves?

The electric and magnetic fields in electromagnetic waves oscillate perpendicular to each other and to the direction of wave propagation. This perpendicular oscillation gives rise to the unique characteristics of electromagnetic waves.

What is the significance of the transverse nature of electromagnetic waves?

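The transverse nature of electromagnetic waves is what makes polarization possible, which underlies applications such as polarized sunglasses and polarizing filters in optics. Interference, diffraction, and the ability to propagate through a vacuum are also key properties of electromagnetic waves, but they arise from their wave and field nature rather than from transversality alone.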

Why can electromagnetic waves propagate through a vacuum?

Unlike mechanical waves, which require a medium, electromagnetic waves consist of changing electric and magnetic fields that sustain each other, so they don't rely on the movement of particles for transmission. This ability comes from their field nature rather than from their transverse nature: transverse mechanical waves, such as waves on a string, still need a medium.

What is polarization in the context of transverse electromagnetic waves?

Polarization refers to the alignment of the oscillations of the electric and magnetic fields in a specific direction perpendicular to the wave’s motion. This property has applications in areas like optics and communication.

Energy Stored In A Capacitor

Energy Stored In A Capacitor: A capacitor is an essential component in electronics that stores electrical energy in an electric field.

The energy stored in a capacitor depends on the voltage across its terminals and the charge stored on its plates. The formula to calculate the energy stored in a capacitor is given by:

Energy (E) = 0.5 × Capacitance (C) × Voltage (V)^2

Where:

- Energy (E) is the energy stored in the capacitor, measured in joules.

- Capacitance (C) is the measure of a capacitor’s ability to store charge, measured in farads (F).

- Voltage (V) is the potential difference across the capacitor’s terminals, measured in volts.

This formula shows that the energy stored in a capacitor is proportional to the capacitance and to the square of the voltage. Capacitors with higher capacitance, or charged to a higher voltage, store more energy. When a capacitor is charged, it accumulates energy in the electric field between its plates. This stored energy can later be released when the capacitor is discharged.
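The factor 0.5 arises because the voltage rises as charge is added: moving a small charge dq onto a capacitor that already holds charge q, and so sits at voltage q/C, takes work (q/C) dq. The sketch below sums that work numerically and compares it with 0.5 × C × V^2. The 100 µF and 12 V values are arbitrary examples, and the function names are illustrative.

```python
def energy_formula(capacitance: float, voltage: float) -> float:
    """E = 0.5 * C * V^2, in joules for C in farads and V in volts."""
    return 0.5 * capacitance * voltage ** 2


def energy_by_charging(capacitance: float, voltage: float, steps: int = 100_000) -> float:
    """Add charge in small increments dq; each costs (q / C) * dq of work.

    Uses the midpoint of each increment, so the sum matches the integral
    of q / C from 0 to Q = C * V.
    """
    final_charge = capacitance * voltage
    dq = final_charge / steps
    work = 0.0
    for i in range(steps):
        q_mid = (i + 0.5) * dq          # charge already on the plates (midpoint)
        work += (q_mid / capacitance) * dq
    return work


C, V = 100e-6, 12.0  # example: 100 microfarads charged to 12 volts
print(f"{energy_formula(C, V):.4f} J")      # 0.0072 J
print(f"{energy_by_charging(C, V):.4f} J")  # 0.0072 J
```

Because the voltage grows linearly with charge, the average voltage during charging is V/2, which is where the one-half comes from.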

The energy stored in a capacitor plays a crucial role in various electronic applications, including smoothing voltage fluctuations, filtering signals, and providing bursts of energy in circuits. Understanding how capacitors store and release energy is fundamental in designing efficient and reliable electronic systems.

Energy Stored in a Capacitor: Explained Further

A capacitor, a fundamental component in electronics, is not just about storing charge; it’s also a reservoir of energy in the form of an electric field.

This stored energy becomes vital in various electronic applications, and comprehending its calculation sheds light on the capacitor’s significance.

Formula and Components:

The energy stored in a capacitor is directly linked to the voltage across it and the amount of charge it can accumulate. The formula to calculate this energy is:

Energy (E) = 0.5 × Capacitance (C) × Voltage (V)^2

Capacitance (C): This property measures how much charge a capacitor can store for a given voltage. It is measured in farads (F).

Voltage (V): The potential difference between the capacitor’s plates, measured in volts. It’s the driving force behind the energy storage process.

Understanding the Equation:

The formula highlights that energy stored is proportional to both the capacitance and the square of the voltage. This means that doubling the voltage will result in a fourfold increase in energy, while doubling the capacitance will result in a twofold increase.
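This scaling can be confirmed by evaluating the formula for a baseline capacitor and then doubling each quantity in turn. The 10 µF and 5 V baseline values are arbitrary.

```python
def stored_energy(capacitance: float, voltage: float) -> float:
    """Energy in joules stored in a capacitor: E = 0.5 * C * V^2."""
    return 0.5 * capacitance * voltage ** 2


base = stored_energy(10e-6, 5.0)
double_v = stored_energy(10e-6, 10.0)   # same capacitor, twice the voltage
double_c = stored_energy(20e-6, 5.0)    # twice the capacitance, same voltage

print(f"baseline: {base * 1e6:.1f} uJ")     # baseline: 125.0 uJ
print(f"double V: x{double_v / base:.1f}")  # double V: x4.0
print(f"double C: x{double_c / base:.1f}")  # double C: x2.0
```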

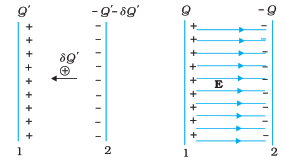

Energy Storage Process:

When a capacitor is connected to a power source, charge flows onto its plates, and the work done moving that charge against the growing electric field is stored as electric potential energy in the field between the plates.

As charge builds up, the voltage across the capacitor rises until it equals the source voltage, at which point the charging current stops. Once fully charged, the capacitor holds this energy until it is released.
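To see the voltage rise and the charging current fall toward zero, the following sketch assumes the capacitor is charged from an ideal DC source through a series resistor (an assumption; the circuit is not specified above). In that case the capacitor voltage follows V(t) = Vs × (1 − e^(−t/RC)), where RC is the time constant, and the stored energy approaches 0.5 × C × Vs^2. The 9 V, 1 kΩ, and 100 µF values are illustrative.

```python
import math


def capacitor_voltage(t: float, v_source: float, resistance: float, capacitance: float) -> float:
    """Voltage across a capacitor charged through a series resistor: Vs * (1 - exp(-t / RC))."""
    tau = resistance * capacitance  # time constant, seconds
    return v_source * (1.0 - math.exp(-t / tau))


def charging_current(t: float, v_source: float, resistance: float, capacitance: float) -> float:
    """Current into the capacitor: (Vs - Vc) / R, which decays toward zero."""
    return (v_source - capacitor_voltage(t, v_source, resistance, capacitance)) / resistance


VS, R, C = 9.0, 1_000.0, 100e-6  # 9 V source, 1 kOhm, 100 uF, so RC = 0.1 s
for n_tau in (0, 1, 3, 5):
    t = n_tau * R * C
    vc = capacitor_voltage(t, VS, R, C)
    current = charging_current(t, VS, R, C)
    energy = 0.5 * C * vc ** 2  # energy stored at this instant
    print(f"t = {n_tau} RC: Vc = {vc:.3f} V, I = {current * 1e3:.3f} mA, E = {energy * 1e3:.3f} mJ")
```

After about five time constants the voltage is within 1% of the source voltage and the current is negligible, which is why a capacitor is usually treated as fully charged at that point.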

Applications:

Filtering and Smoothing: Capacitors are often used to filter out noise and voltage fluctuations from electrical signals, ensuring a stable output.

Energy Storage: In circuits like camera flashes, capacitors accumulate energy and then release it in a short, intense burst when needed.

Backup Power: Capacitors can provide temporary power during brief interruptions, allowing systems to shut down safely.

Timing Circuits: In combination with resistors, capacitors are crucial in creating time delays and oscillations in electronic circuits.

Conclusion:

The energy stored in a capacitor exemplifies the interplay between voltage, capacitance, and electric fields. This energy reservoir is pivotal in electronics, contributing to diverse applications that range from signal processing to energy bursts.

Understanding the nuances of energy storage enhances our ability to design efficient and responsive electronic systems.

Frequently Asked Questions (FAQs)

What is the energy stored in a capacitor?

The energy stored in a capacitor refers to the electric potential energy stored in the electric field between its plates when it’s charged.

How is the energy stored in a capacitor calculated?

The energy stored in a capacitor can be calculated using the formula: Energy (E) = 0.5 × Capacitance (C) × Voltage (V)^2.

What is capacitance?

Capacitance is a measure of a capacitor’s ability to store charge for a given voltage. It is measured in farads (F).

How does voltage affect the energy stored in a capacitor?

The energy stored in a capacitor is directly proportional to the square of the voltage applied across its terminals. Doubling the voltage results in a fourfold increase in stored energy.

Can a capacitor store an infinite amount of energy?

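No. A capacitor's energy storage is limited by its capacitance and by the maximum voltage it can withstand (its rated voltage); above that voltage, the dielectric between the plates breaks down. It cannot store an infinite amount of energy.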